BT FTTP Upgrade from 350/50 to 910/110!

It’s been a while since I posted, and ironically my last post was about getting fiber to the premises installed. Well, I recently found out on my travels that I was able to upgrade from what was once the highest tier package.

I was originally on the 300/50 package, and I consistently got those speeds with no problems. When I spotted that some areas could get an upgrade to a 910/110 package, I was curious to see whether I could get it and what it would cost. To my surprise, when I logged into my BT account I saw that it was available to me too, at the same price I was already paying. So the first thing that went through my mind was: why would I not upgrade? Paying the same and getting more is never a bad thing, right?

I gave BT a call and spoke to one of their reps. He went through his usual script and asked what I was using the connection for. I said I was streaming a lot and downloading games, to which he said the upgrade might be a good idea. He had a look at what deals were available, as he can sometimes get better deals than the ones shown online. Lo and behold, he got me a slightly better deal: rather than £59.99 a month, I’ll now be paying £57.99 a month for the 910/110 package. I wasn’t going to argue with that! Now I’m paying less and getting more!

It didn’t take long for him to sort it all out, but he said my activation date would be in 3 days. I was curious as to why a speed upgrade on an existing fiber line wouldn’t just be a configuration change applied automatically, but I didn’t question it. Three days later it went live, and I didn’t even notice until I ran a speed test later that day… OK, you got me: I was speed testing frequently, and it just so happened to go live in between 2 of them!

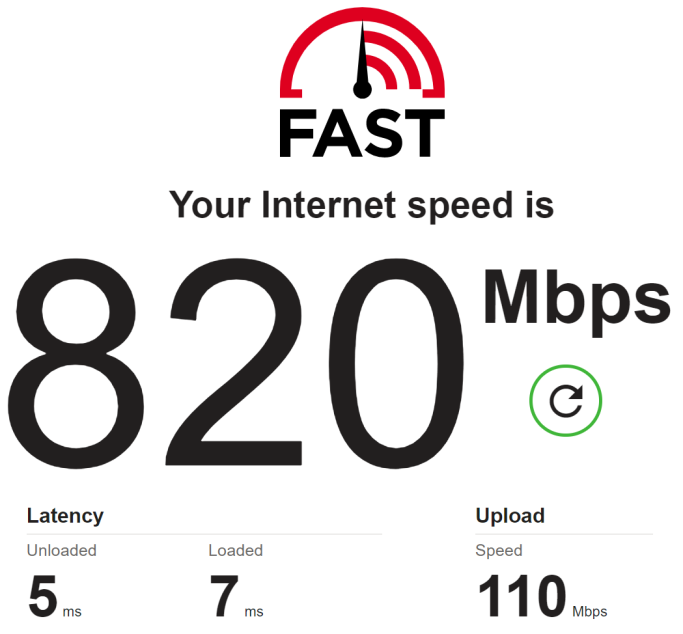

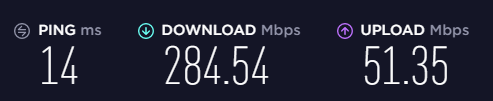

My first speed test was rather unspectacular (relatively speaking), at around 450Mbps down and 110Mbps up. I knew that speed tests start to get unreliable at those speeds, but I tried a couple more anyway for fun. Next I tried https://fast.com, as its servers are hosted a bit more locally. Boom! Pretty much full speed down and definitely full speed up!

Once again, of course, this is a speed test, so it doesn’t quite show the real-world maximum, but it still shows that the connection can achieve far more than the package I was on previously. Anyone who knows me will know that wasn’t enough, so I set about thinking of another way to test it. What better way than downloading a huge game on Steam? Steam has massive amounts of bandwidth available and is well known for providing extremely good speeds (most of the time).

I found a large game that I had on my list and deleted it. In this case it was Destiny 2, an 86.8GB game. This was the result:

101.2MB/s peak! But that’s only(!) 810Mbps. Not quite as high as the speed test above though which was a shame, but still a great result because it was a real life transfer and not just a speed test.

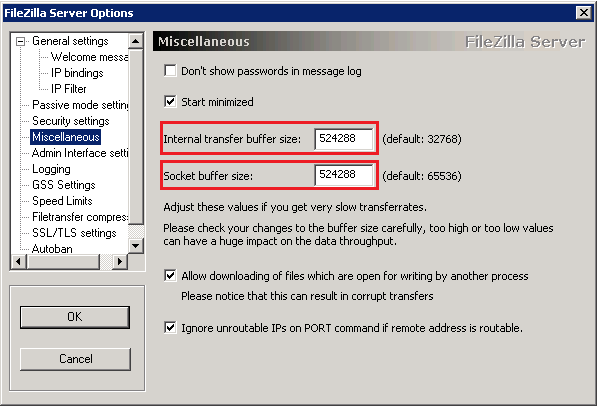
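Steam reports download rates in megabytes per second, while broadband packages are sold in megabits per second, so the conversion is a factor of eight. Here is a minimal sketch of that arithmetic. It assumes Steam’s “MB” means decimal megabytes (10^6 bytes); if it actually means mebibytes (2^20 bytes), the figure comes out about 5% higher, so both are shown. The helper names are hypothetical, and the download-time estimate assumes the 101.2MB/s peak was sustained for the whole 86.8GB, which real downloads rarely manage.

```python
def mbytes_to_mbits(rate_mb_per_s: float) -> float:
    """Convert decimal megabytes/s to megabits/s (8 bits per byte)."""
    return rate_mb_per_s * 8


def mib_to_mbits(rate_mib_per_s: float) -> float:
    """Convert mebibytes/s (2**20 bytes) to decimal megabits/s."""
    return rate_mib_per_s * 2**20 * 8 / 1e6


def download_minutes(size_gb: float, rate_mb_per_s: float) -> float:
    """Minutes to fetch size_gb decimal gigabytes at a constant rate in MB/s."""
    return size_gb * 1000 / rate_mb_per_s / 60


peak = 101.2  # MB/s, the Steam peak from the screenshot
print(f"{mbytes_to_mbits(peak):.1f} Mbps if MB means 10^6 bytes")         # 809.6
print(f"{mib_to_mbits(peak):.1f} Mbps if MB actually means MiB")          # 848.9
print(f"{download_minutes(86.8, peak):.1f} min for 86.8GB at that peak")  # 14.3
```

Either way, the result sits comfortably above the old 300/50 package and somewhat below the 910Mbps headline figure.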

I’m not sure whether there is a bottleneck in my setup, whether the overheads are quite large, or whether I just can’t get those speeds from a single source. Either way, it’s proven itself to be extremely quick, and to be honest it’s rare that I’ll even use that much bandwidth anyway! I’ll need to run some tests on my pfSense router to see whether its CPU is the bottleneck, but it’s not a big deal.
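One way to gauge how much of the gap comes from overheads is to estimate the best-case TCP throughput once Ethernet framing and the PPPoE, IP and TCP headers are taken off. This sketch rests on assumptions, not confirmed details of BT’s network: that 910Mbps is the raw Ethernet rate, that the connection uses PPPoE (which shrinks the IP MTU from 1500 to 1492 bytes), and that traffic is full-size IPv4 packets. The function name is hypothetical, and the VLAN flag is there only in case the WAN link is tagged.

```python
# Per-frame overheads in bytes (standard Ethernet, PPPoE, IPv4 and TCP sizes).
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, MAC header, FCS, inter-frame gap
ETH_PAYLOAD = 1500                   # maximum standard Ethernet payload
VLAN_TAG = 4                         # 802.1Q tag, only if the WAN link is tagged
PPPOE_HEADER = 8                     # 6-byte PPPoE header + 2-byte PPP protocol ID
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12                  # TCP option that Linux enables by default


def max_tcp_goodput(line_rate_mbps: float, pppoe: bool = True,
                    vlan: bool = False, timestamps: bool = True) -> float:
    """Best-case TCP payload rate (Mbps) for back-to-back full-size frames."""
    ip_mtu = ETH_PAYLOAD - (PPPOE_HEADER if pppoe else 0)
    tcp_payload = ip_mtu - IPV4_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)
    # Each full-size frame occupies its payload plus framing on the wire.
    wire_bytes = ETH_PAYLOAD + ETH_WIRE_OVERHEAD + (VLAN_TAG if vlan else 0)
    return line_rate_mbps * tcp_payload / wire_bytes


for ts in (False, True):
    rate = max_tcp_goodput(910, timestamps=ts)
    print(f"TCP timestamps {'on' if ts else 'off'}: ~{rate:.0f} Mbps")
# TCP timestamps off: ~859 Mbps
# TCP timestamps on: ~852 Mbps
```

If those assumptions hold, overheads would explain part of the drop from 910Mbps but not all the way down to 810Mbps. That leaves something like a router CPU limit or the download source itself as a plausible cause for the rest.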

I can’t imagine that BT will offer any faster services any time soon, because that would require new Ethernet cards and other networking equipment for consumers, but I don’t think it’ll matter for some time. Near-gigabit Internet is going to be more than fast enough for even the most demanding households, where half a dozen kids and several adults all want to stream, game or download. One of the LAN party events that I regularly attend has a 1Gbps symmetric line, and it copes just fine with nearly 800 people using it, so if you need more than this line can give, then you probably (definitely!) need to get out more…!

For those of you who don’t know what a UPS is, it’s an uninterruptible power supply, or “battery backup”, for your PC, servers and other electrical items, which takes over when the mains supply fails. I have a few of them: one for my PC, one for my server and one for my network equipment.

Although the batteries weren’t fully charged when I got them, I would have expected them to put out enough power to at least keep the PCs online and/or boot them up. During testing the UPS would switch over to battery just fine, but it would not start the PC from cold (powered off). This isn’t how a UPS is supposed to work; it should work both ways. During testing these batteries managed only 2 minutes of sustained run time before hitting a low-battery condition and shutting off. This wasn’t acceptable: the original batteries would have held the load for at least 10-15 minutes! Despite that, I carried on with testing.
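To put the shortfall in proportion: at the same load, runtime is a rough proxy for how much usable capacity a battery has. Here is a minimal sketch, assuming the load was the same in both cases and treating runtime as proportional to usable capacity. Lead-acid batteries don’t behave exactly linearly, so the result is only a ballpark figure. The function name is hypothetical.

```python
def runtime_fraction(new_minutes: float, old_minutes: float) -> float:
    """Runtime of the new batteries as a fraction of the originals at the same load."""
    if old_minutes <= 0:
        raise ValueError("old_minutes must be positive")
    return new_minutes / old_minutes


new_runtime = 2.0  # minutes before the low-battery shutdown
for original in (10.0, 15.0):  # the originals managed at least 10-15 minutes
    print(f"vs {original:.0f} min: {runtime_fraction(new_runtime, original):.0%}")
# vs 10 min: 20%
# vs 15 min: 13%
```

Because the originals lasted *at least* 10-15 minutes, the new batteries delivered at most roughly 13-20% of the original run time.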